XR Guitar

Advanced Research Project - master's course - Graded A

Platform

Meta Quest 2, WebMIDI

Tools used

Unity

Duration

7 weeks

Team Size

4

Roles

Project manager, Unity developer

In short

This was a project for a course intended to prepare us for the master's thesis. We developed an application for the Meta Quest 2 headset that received data from a MIDI guitar (an electric guitar fitted with a MIDI pickup) and displayed a virtual guitar in place of the real one.

Regrettably, there is not much to showcase, as we were under significant time pressure to complete this project and often neglected to document both the process and the final product. Nevertheless, it was an enjoyable and rewarding project that I take great pride in sharing.

The situation

We partnered with a supervisor who was collaborating with a guitar museum and looking for students to create an 'XR guitar.' This innovative concept aimed to let the player use a physical guitar while its visual appearance was augmented using XR (extended reality) technology. Additionally, the application we were tasked with developing needed to replicate a 'B-bending' feature, as found on guitars fitted with a B-bender: a mechanism that raises the pitch of the B string, typically when the player pushes down on the guitar's neck. The physical guitar itself has no such mechanism.

To facilitate this project, we were provided with a normal electric guitar, which we were free to customize as necessary, and a MIDI pickup. MIDI (Musical Instrument Digital Interface) is a protocol that describes musical events, such as which note was played and how hard, as digital messages that a computer can receive. The computer can then generate sound based on the notes played. The MIDI pickup is mounted on the electric guitar and connected to a computer via USB.
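To make the MIDI part more concrete: a played note normally arrives as a three-byte Note On message (a status byte of `0x90` plus the channel number, followed by the note number and the velocity). The sketch below decodes such a message. As an assumption not stated in this write-up, it also maps the note to a string and fret by treating each MIDI channel as one string in standard tuning, a mode many MIDI guitar pickups offer. `decode_note_on`, `FrettedNote` and `OPEN_STRING_NOTES` are hypothetical names, not code from the project.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: one MIDI channel per string, standard tuning.
# Index = 0-based channel; value = MIDI note number of the open string.
# Channel 0 = string 1 (high E4 = 64) ... channel 5 = string 6 (low E2 = 40).
OPEN_STRING_NOTES = [64, 59, 55, 50, 45, 40]


@dataclass
class FrettedNote:
    string: int    # 1 = high E ... 6 = low E
    fret: int      # 0 = open string
    velocity: int  # 1..127, how hard the string was plucked


def decode_note_on(message: bytes) -> Optional[FrettedNote]:
    """Decode a 3-byte MIDI Note On message into a string/fret position.

    Returns None for anything that is not a sounding Note On on one of
    the six assumed string channels.
    """
    if len(message) != 3:
        return None
    status, note, velocity = message
    # Upper nibble 0x9 = Note On; velocity 0 is conventionally a Note Off.
    if status & 0xF0 != 0x90 or velocity == 0:
        return None
    channel = status & 0x0F
    if channel >= len(OPEN_STRING_NOTES):
        return None
    fret = note - OPEN_STRING_NOTES[channel]
    if fret < 0:
        return None  # below the open string: not playable in this tuning
    return FrettedNote(string=channel + 1, fret=fret, velocity=velocity)


# Example: Note On, channel 1 (the B string), note 62 (D4), velocity 100.
print(decode_note_on(bytes([0x91, 62, 100])))
# → FrettedNote(string=2, fret=3, velocity=100)
```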

Target

I took on the role of project manager and, knowing that everyone in the group had an intense period with other courses, I decided it would be best to quickly identify our main challenges and research how we could tackle them.

There were two main challenges in this project. Firstly, where should we send the MIDI data (to the Quest 2 or to a separate computer), and how? Secondly, how could we map a virtual guitar onto the position of the real guitar and replicate the B-bending feature?

Action

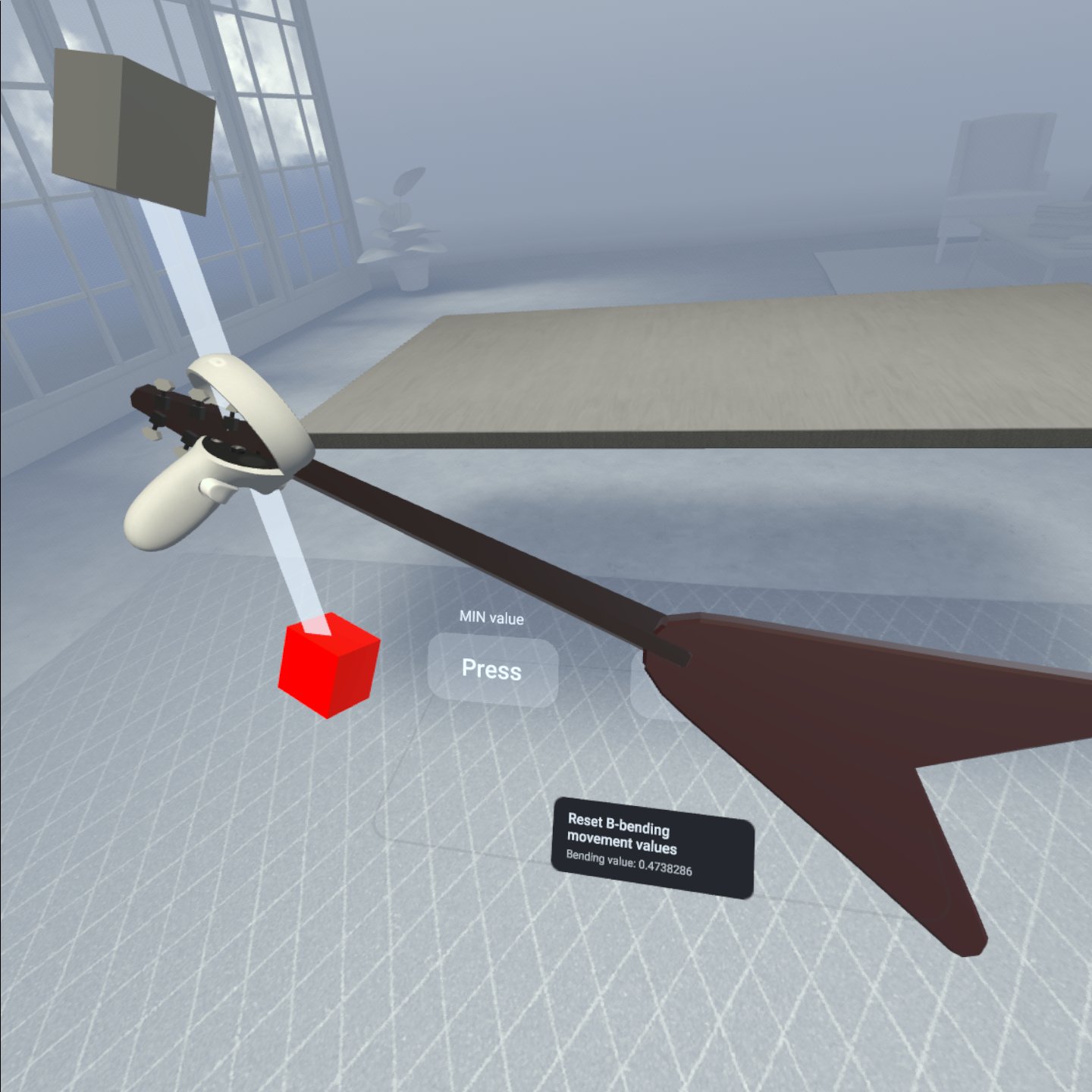

I researched both challenges extensively and found several ways to address them. The second challenge could be solved fairly easily by attaching one of the Quest 2 controllers to the real guitar and attaching the virtual guitar to that controller inside Unity. Defining a "normal" position and a "bending" position would then let the player perform the bending motion. More advanced solutions would be to use dedicated trackers, such as HTC Vive Trackers with SteamVR, or an Xbox Kinect to detect body movements.
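Defining a "normal" and a "bending" position implies turning the controller's tracked position into a continuous bend value. In the project this logic lived in Unity; the sketch below is written in Python only to keep it self-contained, and it shows one possible approach rather than the project's actual code: project the current position onto the line between the two calibrated positions and clamp the result to the range 0 to 1. `bend_amount`, `NORMAL` and `BENDING` are hypothetical names, and the calibration coordinates are made up.

```python
# Hypothetical helper: convert the tracked controller position into a bend
# amount between 0.0 (guitar at its "normal" position) and 1.0 (guitar at
# its "bending" position). Positions are (x, y, z) in metres.
Vec3 = tuple[float, float, float]


def bend_amount(current: Vec3, normal: Vec3, bending: Vec3) -> float:
    """Project `current` onto the segment normal -> bending, clamped to [0, 1]."""
    axis = [b - n for b, n in zip(bending, normal)]
    offset = [c - n for c, n in zip(current, normal)]
    length_sq = sum(a * a for a in axis)
    if length_sq == 0.0:
        return 0.0  # calibration positions coincide; treat as "no bend"
    # Scalar projection of the offset onto the calibration axis.
    t = sum(o * a for o, a in zip(offset, axis)) / length_sq
    return min(max(t, 0.0), 1.0)


# Made-up calibration: headstock at rest, and pushed 12.5 cm down for a full bend.
NORMAL = (0.0, 0.0, 0.0)
BENDING = (0.0, -0.125, 0.0)

print(bend_amount((0.0, -0.0625, 0.0), NORMAL, BENDING))  # → 0.5 (halfway)
print(bend_amount((0.0, 0.1, 0.0), NORMAL, BENDING))      # → 0.0 (clamped)
```

Projecting onto the calibration axis, instead of measuring the raw distance to the "bending" position, means small sideways movements of the guitar barely affect the bend value.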

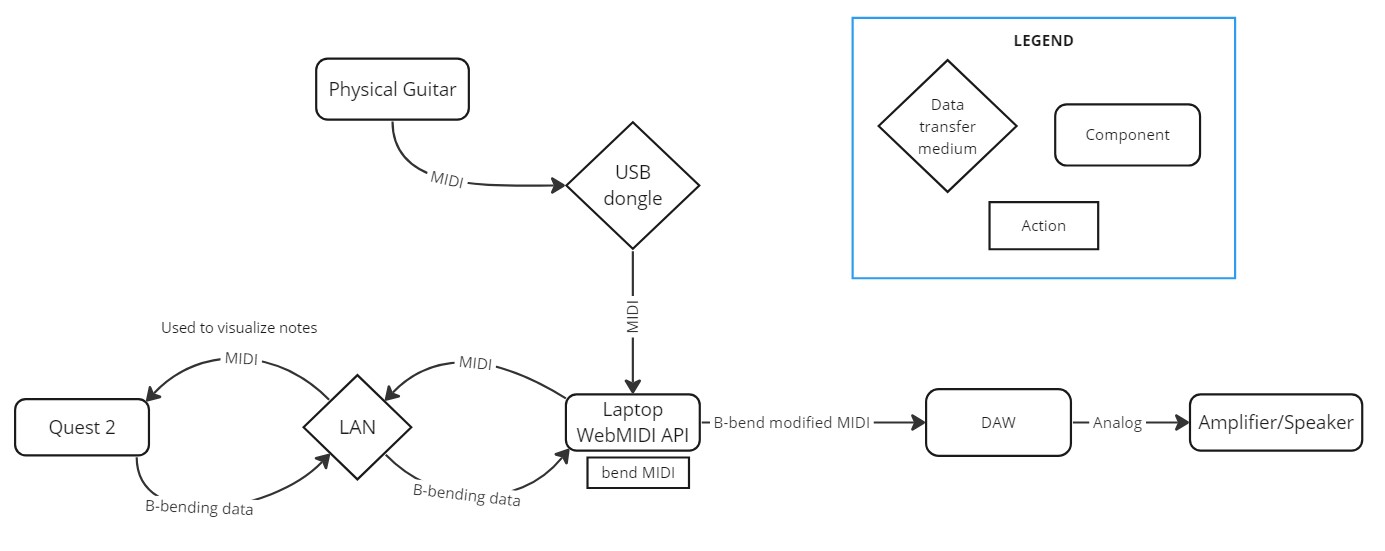

The first challenge was harder to solve conveniently. We tried sending the MIDI data directly to the Quest 2 over a cable (hoping to modify the MIDI data for B-bending in Unity), but the Quest 2 did not recognize the MIDI pickup. We could easily send the MIDI data to a computer and play the guitar sounds in a digital audio workstation (DAW), but I could not find a simple way for the DAW to receive data from the Quest 2 about the user performing a B-bending action. I knew that we could easily send data over websockets on a local network, and I found that another MIDI application for the Quest 2 (VRtuos) used the WebMIDI API. I asked a group member who was experienced with web development and JavaScript to investigate whether he could use this API to set up a websocket connection to the Quest 2, which he managed to do. Below is the data model I created to make it clear to the group how we could solve this challenge.

Result

With the data model described above, we could send MIDI data to the Quest 2 to visualize which strings and notes the user was playing on the virtual guitar. In the other direction, the Quest 2 could send a parameter describing how much B-bending the user wanted. The computer could then modify the MIDI data according to this parameter and finally send the result to software that played the MIDI audio.
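One way to implement the step where the computer modifies the MIDI data according to the bend parameter is to emit a MIDI Pitch Bend message. This is a minimal sketch under assumptions that the write-up does not state: the bend parameter arrives as a number from 0 to 1, a full bend raises the B string by a whole tone (as on mechanical B-benders), the synthesizer uses the General MIDI default pitch-bend range of ±2 semitones, and the B string has its own MIDI channel. `pitch_bend_message` and the constants are hypothetical.

```python
# Sketch: turn the bend amount sent by the Quest 2 into a MIDI Pitch Bend
# message for the B string only. All constants are assumptions.
B_STRING_CHANNEL = 1      # 0-based; string 2 (B) in one-channel-per-string mode
B_BENDER_SEMITONES = 2.0  # a full B-bend raises the string by a whole tone
SYNTH_BEND_RANGE = 2.0    # synth pitch-bend range in semitones (GM default: +/-2)


def pitch_bend_message(amount: float, channel: int = B_STRING_CHANNEL) -> bytes:
    """Return a 3-byte Pitch Bend message for a bend amount in [0, 1]."""
    amount = min(max(amount, 0.0), 1.0)
    semitones = amount * B_BENDER_SEMITONES
    # 14-bit value: 8192 = no bend, 16383 = maximum upward bend.
    value = 8192 + round(semitones / SYNTH_BEND_RANGE * 8192)
    value = min(value, 16383)
    # Status byte 0xE0 | channel, then the low 7 bits, then the high 7 bits.
    return bytes([0xE0 | channel, value & 0x7F, (value >> 7) & 0x7F])


print(pitch_bend_message(0.0).hex())  # → e10040 (centre: no bend)
print(pitch_bend_message(0.5).hex())  # → e10060 (one semitone up)
print(pitch_bend_message(1.0).hex())  # → e17f7f (whole tone, clamped to 16383)
```

Pitch Bend acts on a whole MIDI channel, so bending only the B string relies on that string having its own channel; if all strings shared one channel, every sounding note would bend.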

The video at the top of the page shows an early version of the prototype: the first time we got everything working as intended.

Below is the written scientific report, of which I wrote a major part. It explains much more, including our user evaluation.

(You can download the PDF [here].)

Reflection

I, and probably the whole group, learned a lot during this project about developing in Unity, working with MIDI data, and conducting scientific research on virtual instruments. The project goal was quite ambitious, and in retrospect I believe the group's focus on implementation may have come at the expense of the scientific research itself, which was a big part of the course. For example, the user evaluation was not very extensive. If I did the work again, I would make sure a scientific research plan was drawn up at the beginning, before starting to design and develop the prototype.

The Quest 2 controller attached to the head of the guitar.

An early version of the virtual guitar, with a B-bending bar at the head. The buttons shown reset the position of the B-bending bar.

The data model showing how data flowed between components.